LendingTree Sets New CX Standard For Trustworthy AI In Financial Services

In regulated finance, trust is the product. LendingTree’s Chris Hall explains how disciplined AI governance determines when agents are ready to take on greater customer-facing responsibility.

Key Points

In financial services, AI mistakes carry real consequences, and even small calculation errors can erode customer trust during high-stakes decisions.

Chris Hall, Director of Product at LendingTree, explains how the company treats trust as the core of customer experience when expanding AI in lending journeys.

LendingTree expands an AI agent's authority only after human review, strict accuracy testing, and automated risk checks confirm the agent is ready for customer-facing use.

In regulated financial services, AI agents face a different kind of pressure test. Businesses across industries are committing to AI agents as a long-term strategy, and major institutions like JPMorgan have appointed dedicated AI strategy leads to accelerate the push. In financial services, though, that momentum collides with legal exposure, compliance requirements, and the reality that consumers are trusting a platform with some of the most sensitive decisions of their lives. A gap is widening between what AI can technically generate and what customers can confidently rely on, and the companies closing it are building governance systems designed to protect the customer experience, not just the balance sheet.

Chris Hall, Director of Product at LendingTree, leads AI product innovation at one of the country's most recognized financial marketplace platforms, where consumers compare rates and options across mortgage, auto, credit, and personal loan products. Hall joined LendingTree following a tenure at CarMax, where he was part of the founding product organization. His work sits at the intersection of consumer experience and the practical limits of autonomous AI in a compliance-heavy environment.

“Agents are an exciting vision for the future, but they’re very different in financial products,” Hall says. “There’s a tremendous amount of risk, and in financial markets, trust is the product.” In practice, that means AI cannot simply be helpful. It has to be dependable in moments when customers are weighing decisions that affect their savings, debt, and long-term stability. Hall’s team prioritizes applications that guide consumers through complex choices and answer questions in context, supporting the shopping journey rather than attempting to replace it.

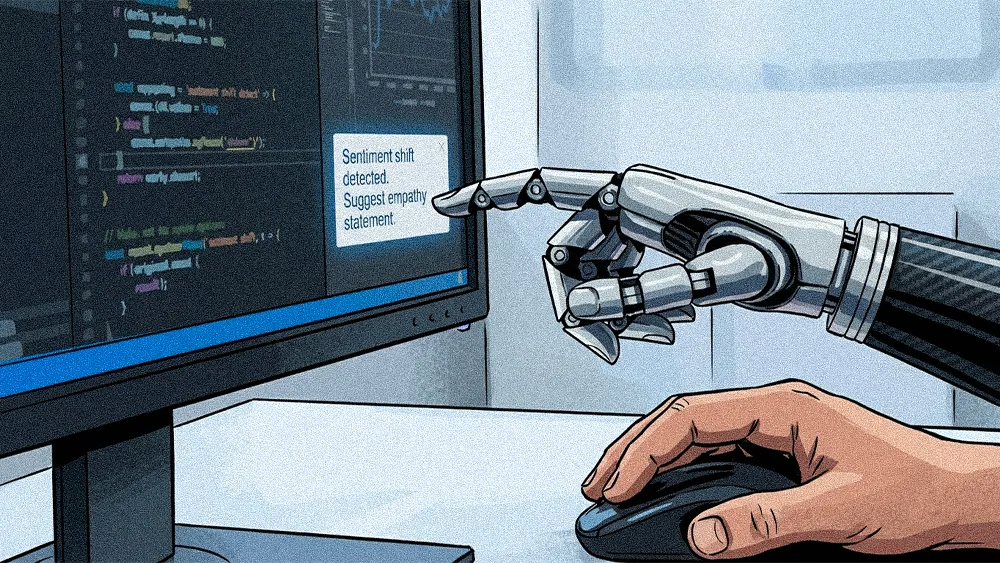

That approach works well for informational guidance, but the experience changes the moment precise calculations enter the conversation. When agents are asked about mortgage rates or monthly payment estimates, even small inaccuracies can distort a customer’s understanding of affordability. Because those errors carry real consequences for decision confidence, Hall’s team has put strict controls in place, building around AI agent observability instead of the faster “launch and learn” model common in lower-stakes environments. In financial services, protecting the integrity of the interaction is part of protecting the relationship.

Math is hard: Numerical accuracy is not just a technical requirement in financial services. It is a customer experience threshold. A payment estimate shapes how a consumer evaluates affordability, timelines, and trade-offs. “We have very tight control over the agents we develop to prevent them from hallucinating, making up inaccurate financial information, or providing inaccurate calculations,” Hall explains.
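Hall does not describe how LendingTree enforces this. As an illustration only, one common guard is to never pass along arithmetic a model produced on its own, and instead to recompute any stated figure deterministically before it is shown. The sketch below assumes a fixed-rate, fully amortizing loan and uses the standard amortization formula; the function names and the one-cent tolerance are hypothetical.

```python
def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed-rate, fully amortizing monthly payment.

    M = P * r * (1 + r)**n / ((1 + r)**n - 1), where r is the monthly
    rate and n is the number of monthly payments.
    """
    n = years * 12
    r = annual_rate / 12
    if r == 0:
        # With no interest, the principal is simply split evenly.
        return principal / n
    growth = (1 + r) ** n
    return principal * r * growth / (growth - 1)


def estimate_is_accurate(agent_value: float, principal: float, annual_rate: float,
                         years: int, tolerance: float = 0.01) -> bool:
    """Hypothetical guard: accept the agent's figure only if it matches
    the deterministic calculation to within `tolerance` dollars."""
    expected = monthly_payment(principal, annual_rate, years)
    return abs(agent_value - expected) <= tolerance


# Example: $300,000 at a 6% annual rate over 30 years.
print(round(monthly_payment(300_000, 0.06, 30), 2))  # 1798.65
print(estimate_is_accurate(1850.00, 300_000, 0.06, 30))  # False
```

For a $300,000 loan at 6% over 30 years, the formula gives about $1,798.65 a month, so an agent that quoted $1,850 would be caught before the figure reached a customer.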

The human firewall: Before any AI-generated response reaches a consumer, it moves through multiple checkpoints designed to protect the integrity of the interaction. “Observability is everything for us. Every output of an agent under testing gets reviewed by a human and also gets run through a system to evaluate potential risk,” says Hall. That layered review process gives the team space to catch errors before they influence real financial decisions. In practice, it creates an invisible safety net that allows the customer experience to feel seamless while remaining tightly controlled behind the scenes.
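The article does not say what LendingTree's risk-evaluation system checks. The sketch below is a hypothetical illustration of the layered structure Hall describes: every output produced during testing is scored by automated risk checks and is also queued for a human reviewer, and nothing counts as releasable until a person approves it and no check has fired. The check names and the keyword rule are placeholders, not LendingTree's criteria.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical sketch, not LendingTree's system.
# A risk check takes an agent output and returns True if it looks risky.
RiskCheck = Callable[[str], bool]


@dataclass
class ReviewRecord:
    output: str
    risk_flags: List[str]
    # None until a human reviewer has looked at the output.
    human_approved: Optional[bool] = None


def review_output(output: str, checks: Dict[str, RiskCheck],
                  human_queue: List[ReviewRecord]) -> ReviewRecord:
    """Run every automated check, then queue the output for a human,
    whether or not any check fired."""
    flags = [name for name, check in checks.items() if check(output)]
    record = ReviewRecord(output=output, risk_flags=flags)
    human_queue.append(record)
    return record


def releasable(record: ReviewRecord) -> bool:
    """Both layers must agree: a human approved it and no check fired."""
    return record.human_approved is True and not record.risk_flags


# Placeholder check: flag language that promises a rate.
checks = {"promises_rate": lambda text: "guaranteed" in text.lower()}
queue: List[ReviewRecord] = []
flagged = review_output("Your rate is guaranteed at 5%.", checks, queue)
clean = review_output("Rates vary by lender and credit profile.", checks, queue)
clean.human_approved = True
print(flagged.risk_flags, releasable(flagged), releasable(clean))
```

Routing every output to a human, rather than only the flagged ones, mirrors Hall's point that each output under testing gets both kinds of review.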

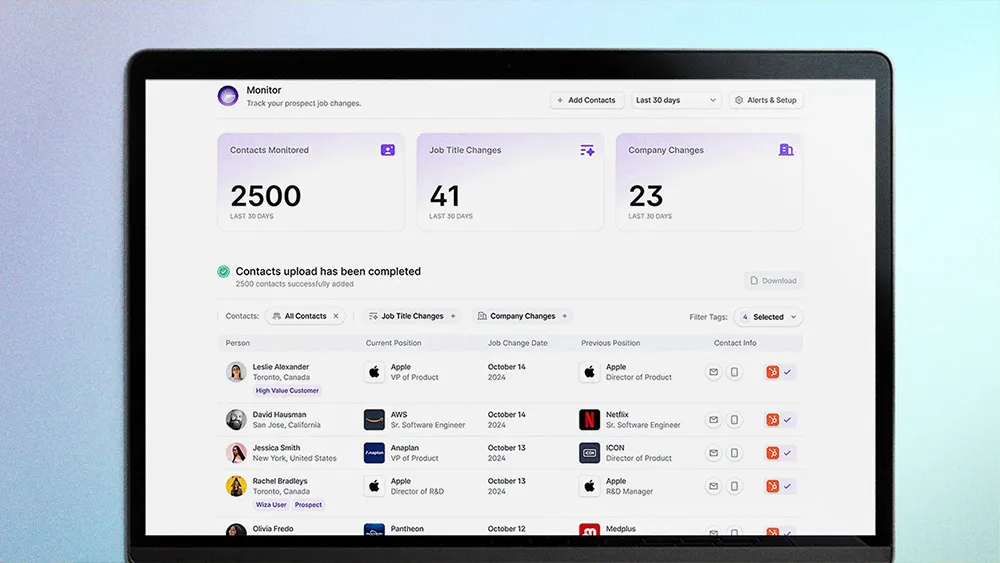

In a market where underlying models and their capabilities change by the week, Hall avoids long-horizon forecasting. His team tests agents in months-long sprints, and any expansion of agent authority requires passing a three-gate readiness test. First, human reviewers must consistently stop finding errors. Next, the agent must handle more complex questions accurately in controlled testing environments. Lastly, it must clear a growing suite of automated evaluations that cover calculations and edge cases. Only when all three gates are cleared does the team consider expanding what the agent is allowed to say to customers and in which contexts. It is a framework designed not just to manage nondeterministic LLMs, but to ensure that each new capability strengthens, rather than undermines, the customer experience.
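The three gates can be expressed as a simple readiness check. This is a minimal sketch under stated assumptions: the thresholds (three consecutive clean review windows, 99% accuracy on complex questions, every automated eval passing) and the field names are hypothetical, since the article does not give LendingTree's actual criteria.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ReadinessEvidence:
    # Errors human reviewers found in each recent review window, oldest first.
    human_errors_per_window: List[int]
    # Share of harder questions answered correctly in a controlled test environment.
    complex_question_accuracy: float
    # Automated suite covering calculations and edge cases: eval name -> passed.
    automated_evals: Dict[str, bool]


def readiness_gates(evidence: ReadinessEvidence,
                    clean_windows_required: int = 3,
                    min_complex_accuracy: float = 0.99) -> Tuple[bool, List[str]]:
    """Return (ready, failed_gates). All thresholds here are hypothetical."""
    failed: List[str] = []

    # Gate 1: reviewers have consistently stopped finding errors, i.e. the
    # most recent windows all came back clean.
    recent = evidence.human_errors_per_window[-clean_windows_required:]
    if len(recent) < clean_windows_required or any(recent):
        failed.append("human_review")

    # Gate 2: accuracy on more complex questions in controlled testing.
    if evidence.complex_question_accuracy < min_complex_accuracy:
        failed.append("complex_questions")

    # Gate 3: the automated suite exists and every eval in it passes.
    if not evidence.automated_evals or not all(evidence.automated_evals.values()):
        failed.append("automated_evals")

    return (not failed, failed)


evidence = ReadinessEvidence(
    human_errors_per_window=[4, 1, 0, 0, 0],
    complex_question_accuracy=0.995,
    automated_evals={"payment_calc": True, "zero_rate_edge_case": True},
)
print(readiness_gates(evidence))  # (True, [])
```

The sketch reports every failed gate rather than stopping at the first, which shows at a glance what evidence is still missing; a team that wants strictly sequential gates could return as soon as one fails.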

Bot catches bot: As that framework scales, the review process has to scale with it. "The best approach I’ve seen in research is to use other LLMs to evaluate the output of your agent. It's a little meta," notes Hall, "but if it works well, that gives you the ability to scale. We haven't tested this yet, but it's on our roadmap."

Playing the long game: This discipline does more than protect against errors; it earns the AI agent the right to solve harder consumer problems. For Hall, the north star is a consumer navigating something as involved as a six-month mortgage search, which demands sustained guidance rather than a single interaction. "For consumers who have a research question, like shopping for a mortgage, agents can provide a lot of context and support," he says. "That ability to guide shoppers over time to make the best decision possible seems like it could be a big unlock."

Consumer sentiment around that vision is, as Hall puts it, a mixed bag. Some users approach AI-assisted guidance with skepticism, especially when financial stakes are high. Others increasingly expect intelligent support to be built into the experience. For LendingTree, that range of expectations turns trust into a design requirement. The company’s long investment in data security and brand credibility becomes part of how it introduces AI into the journey, meeting customers at their comfort level rather than forcing adoption.

The regulatory environment for AI in financial services is convoluted, with state laws rolling out continuously and federal preemption still unsettled. For customers, that instability can translate into uncertainty about how their data is handled and how decisions are made. That is why internal rigor matters beyond compliance. It creates consistency in the experience, even when the legal landscape shifts. “Laws are changing every single day,” he says. “It’s important to be aware of that, and to have the internal controls in place to move with it.” In practice, that discipline helps ensure that customer trust does not fluctuate with policy headlines.