
Customer Success Leaders Turn to Missteps as Their Most Valuable Source of Insight

Vasif Abilov, Sales Operations Program Manager at Amazon, explains how urgency, honesty, and human diagnosis build stronger customer trust.

Key Points

Customer success teams often overlook the small problems and low review scores that reveal when trust is slipping.

Vasif Abilov, a Sales Operations Program Manager at Amazon, explains why urgency, transparency, and human diagnosis matter more than perfect satisfaction scores.

He shows how teams strengthen loyalty by learning from breakdowns, owning mistakes, and implementing AI in ways that support rather than replace human judgment.


Some customer success leaders have stopped chasing spotless scorecards and started studying where things break. Instead of treating mistakes as liabilities to be buried, they treat them as fuel for better systems and stronger relationships. In this view, the metric that matters most isn't the success rate, but how quickly teams learn from what goes wrong.

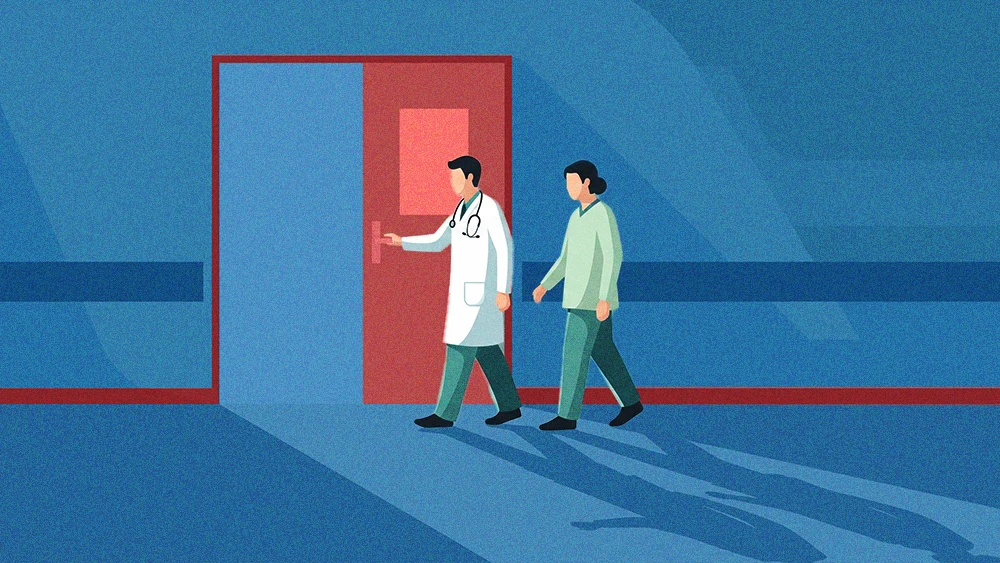

Vasif Abilov, a Sales Operations Program Manager at Amazon, has spent years across Amazon and AWS watching customers hit small roadblocks that feel like full-blown emergencies. Those moments taught him that the fastest route to trust rarely comes from flawless execution. It comes from how well you diagnose the real problem, how honestly you own the miss, and how quickly you turn a stumble into clarity.

"People brag about getting a 95% satisfaction score, but the 4% giving you one or two stars is what companies should focus on. Those one-star and two-star reviews are actually more valuable than all the five-star ones because they point directly to a system or process that's broken," says Abilov. Those low scores tell the truth faster than any glowing review, and in his view they are the clearest map to what needs fixing.

Sound the alarm: "Companies should build a culture that celebrates their biggest failures," he explains. "When you talk through a failure step by step, you create an alarm system for other teams and a real opportunity for improvement. It might feel embarrassing, but bringing that conversation to senior leadership is what helps the whole team reach a higher level of success."

When systemic issues arise, the philosophy shifts to a disciplined protocol Abilov learned at AWS. At the heart of his 'fix first, explain later' method is a commitment to direct, blame-accepting transparency.

Call the fire brigade: "You have to be able to grab the red phone and put the fire out before you fix the infrastructure. If customers are experiencing a systematic error, you calm them down, fix their immediate problem, and then you go in to fix the systems," advises Abilov.

Own the blame: "Be open, transparent, and admit you messed up. We would tell them directly, 'Look, we messed this up. This is on us,'" he says. "Every customer has their own customers, so they understand. If you explain the impact, outline the fix, and follow up, you build trust. Under-promise and over-deliver."

Preventing the kinds of problems that require this 'fire-fighting' approach means leaders must learn to interpret the subtle signals of eroding loyalty. Abilov identifies two primary patterns to watch for: the customer who grows louder as issues repeat without resolution, and the customer who goes silent. These behaviors tell the human story behind the data, and reading them early can be the key to proactive improvement.

Waste no time: He argues that most customer relationships hinge on one simple dynamic: how seriously you treat the customer's most immediate problem. "Urgency over everything is what I've noticed. They have an issue that's worth a dollar, but it's so urgent for them their world stops. The moment you resolve it, you become their best friend. Then they come up with a million-dollar question down the line," Abilov says.

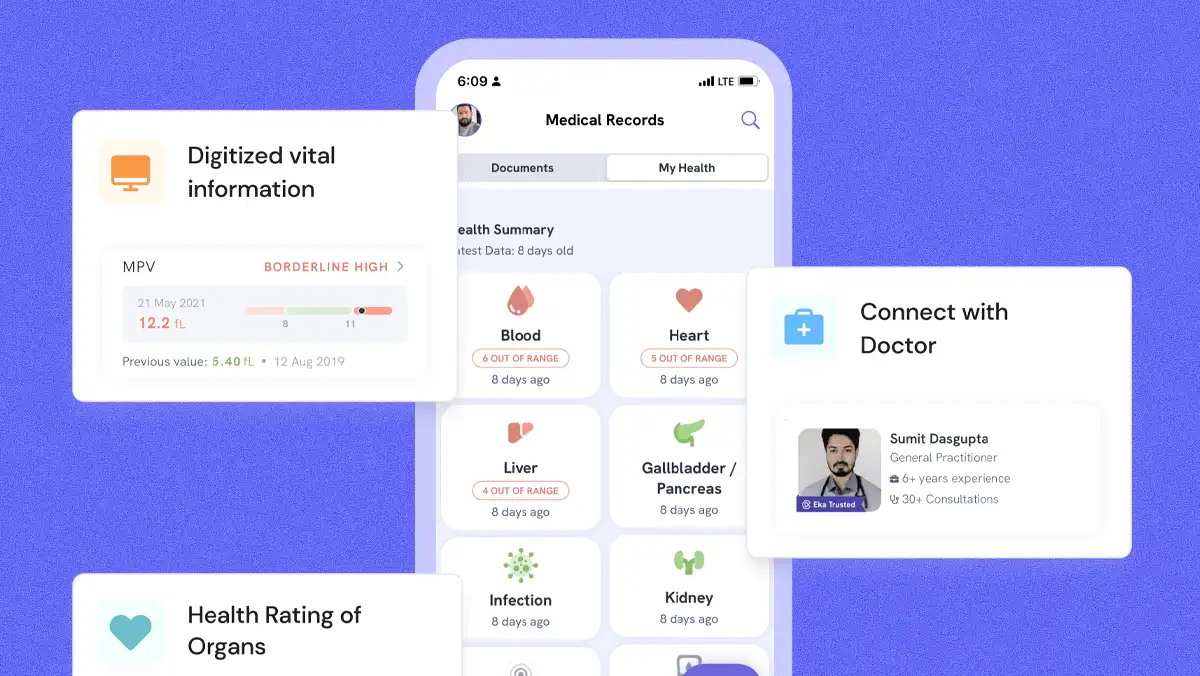

The human diagnostician: Abilov’s philosophy rests on a practical understanding of technology's limits. Before any long-term trust can be built, the customer's immediate pain must be solved by a human capable of true diagnosis, he says. "AI prepares automated answers, but the human factor is what creates a satisfied customer. You have to understand whether the problem they think they have is the one that actually exists. That ability to find the true root cause is something AI still struggles to do well."

Ultimately, the biggest risk of AI adoption may lie not in the technology itself but in clumsy implementation. "For an urgent issue, a customer doesn't want to talk to a robot," he says, describing a common frustration. For leadership, the critical takeaway is that how you implement AI often matters more than whether you implement it at all. "Selecting and implementing those tools correctly is what matters the most at this point," he concludes.