Ex-Five9 CMO Calls for 'AIOps' to Solve the AI Trust Problem

Learn how former Five9 CMO Niki Hall's AIOps framework can help build trust in agentic AI that gets things done, while addressing critical data privacy concerns for CX.

Key Points

As enterprise AI shifts from simply answering questions to actively getting things done, building trust in its performance is a key challenge.

Niki Hall, former Chief Marketing Officer at Five9, explains how a new "AIOps" governance function can ensure AI reliability.

This approach supports AI in roles like "The Fixer" and prepares businesses for critical data privacy considerations as personal and corporate systems integrate.

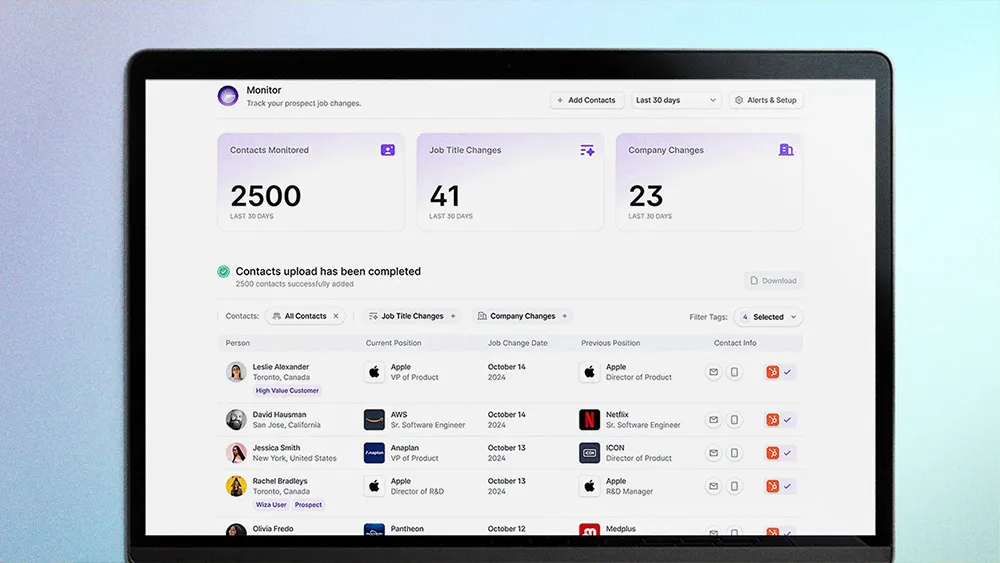

For the past few years, enterprise AI has been focused on building "answer engines." But the real frontier is about getting things done before the customer even asks. The move from passive answers to proactive action is powered by agentic AI, and a leading marketing executive has a clear framework for how to implement it.

That executive is Niki Hall, the former Chief Marketing Officer of Five9 and a fractional marketing leader. A performance-driven executive with over two decades of experience at high-tech companies like Cisco and Polycom, she is a member of the Contact Center Week (CCW) Board and the Forbes Communications Council. Hall points to what she sees as a fundamental hurdle for adoption: trust.

"At a recent CCW board meeting, a leader from a major brand articulated a core challenge, asking why they should use AI now when they have a hundred years of experience training humans who they know work. Many of those same leaders are ready to adopt AI, but first they need visibility into its performance and the governance to control it. They need to see if it's pulling from the right content or hallucinating, and then have the ability to fine-tune it," Hall says. Her solution? A new governance function she calls "AIOps" (AI Operations).

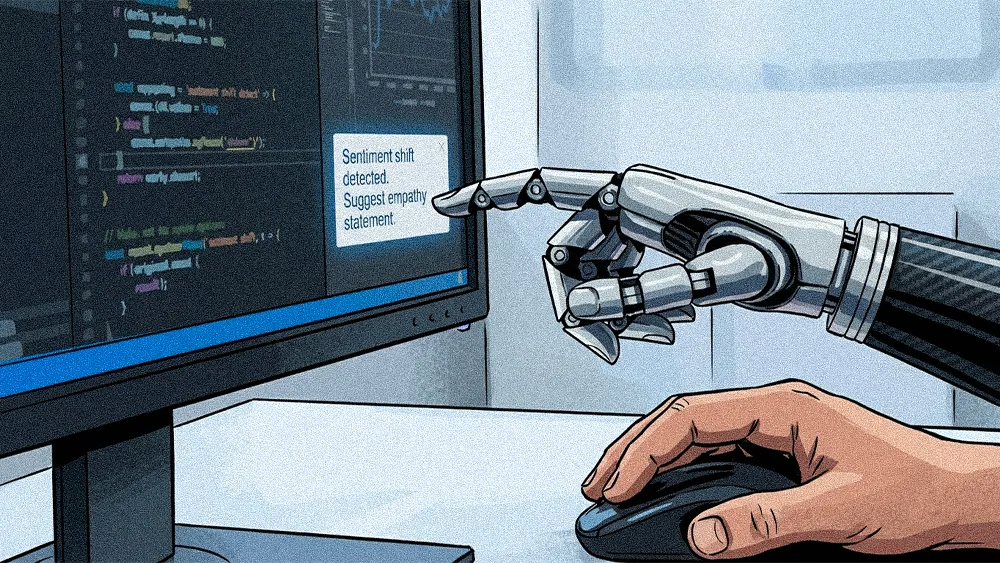

AIOps acts as a dedicated operational layer to monitor AI performance and make sure it adheres to the right content, preventing the "hallucinations" that contribute to leader skepticism. Hall breaks this governed AI down into three distinct roles it can play for the customer.

Fix it: First is "The Fixer," an AI that proactively solves problems. This type of AI is the engine that turns a flight delay into an automatic rebooking or makes changing a dinner reservation feel entirely natural. Hall explains: "I'm usually the first to give up and demand a human, shouting 'Agent! Agent! Agent!' But the AI I interacted with to change a reservation was different. It felt natural, not robotic, and made the change seamlessly. It confirmed the requested change, and after my approval, it delivered: 'Okay. Great. Done.'"

Work with me: Next is "The Co-Pilot," which guides customers through multi-step processes like financial onboarding. It populates forms, translates jargon, and assists step-by-step. "When a truly complex issue gets escalated to a human agent, they are equipped with AI summaries. So they have a summary of everything that was discussed leading up to this. That's how AI can help augment the human, freeing them to focus on the high-value, complex problems where they are needed most," she outlines.

On the lookout: Finally, "The Scout" acts as a hyper-personalization engine. Moving beyond simple AI-driven recommendations, it curates options based on a user's true preferences and behavior, acting like a personal shopper who understands their vibe. "I have a CMO GPT, I have a mom one, I have a cooking one. I have different GPTs depending upon what persona I'm using. Brands can do this, too," she says.

Hall suggests that this kind of natural experience is best achieved through a phased "crawl, walk, run" implementation, in which organizations test new ideas in a low-risk environment. "You should begin by testing this technology in a small, low-risk use case. At an airline, for instance, you wouldn't test a new agentic AI on your 'Global Services' members. That's the crème de la crème. You start with the 'bronze' tier customers, who travel less frequently. The stakes are simply not as high if they receive the wrong information, compared to your top-tier members who are traveling weekly," she advises.

As AI becomes more deeply integrated into enterprise platforms, it introduces a new layer to the conversation. Hall points to developments from major tech players like Salesforce, which has announced plans to integrate AI deeply into core platforms such as its Service Cloud. For her, this means the definition of governance will need to expand. While it currently focuses on preventing performance errors, it will soon need to encompass data privacy. "My biggest concern is the coming challenge of data privacy. As personal and corporate systems begin to integrate, how does all that personal data not get into a corporate data cloud? What if it's a credit card? PII? The next frontier of governance isn't just about AI performance; it's about the fundamental security of that data."